Data Preparation Strategies & AutoAI Advancements: Watson Studio Essentials

John Q. Todd, Sr. Business Consultant/Product Researcher, Total Resource Management (TRM)

Posted 6/27/2024


Let us begin this article with a fun disclaimer: No, it has not been generated or “written” using generative AI tools. Rather, a human has typed every letter and made every grammatical and punctuation mistake. (OK, spell check was turned on, so you got me there.) With that out of the way, now we can talk.

There are many AI and Machine Learning (ML) tools on the market today, and many more are being created as you read this article. If you think AI/ML is still an emerging technology, you are correct, but you may be surprised by how many AI/ML tools already exist and how much attention they are receiving.

You may be wondering how these new tools could help your business, or at least the part of the business you are responsible for. AI/ML features are being embedded into many of the well-known software solutions we have relied on for years to keep track of our work activities. Maximo (now MAS), SAP, and Oracle are just a few of the products that now sport some degree of AI/ML functionality, and the list goes on.

The question is: What might you need to have in place before being able to take advantage of these new tools, and what might the process to adopt them look like?

Start with the problem you are trying to solve

All projects should begin with a problem statement. What problem are you trying to solve, and how could AI/ML tools make the solution better, more efficient, or more impactful? Maybe you need to reduce the cost of something, and you believe these tools can help. (Always be prepared to be wrong. It’s OK!) Maybe your business has suffered huge costs from equipment failures in the past, and you want to keep them from happening again. Whatever the context, take the time to write your problem statement(s) well. They will be your guide and the final judge of whether your AI/ML project was a success.

I knew you were going to talk about data!

Yes, of course, data is always important. Where do you think AI/ML gets all its responses from? Models are eventually built to process potentially massive amounts of data and return probabilistically reasonable answers, but what do those models learn from? You got it: data.

Take a moment to get yourself a no-cost account at IBM Watson Studio.

Aim for the “try it free” button and get set up with an account.

Certainly, you can jump right to the Large Language Models (LLMs) and generative tools, asking questions and otherwise prompting Watson to produce summaries and new content. This can be very useful for learning, researching, or finding content for a presentation or other media. Yes, go ahead and have Watson write a poem about puppies on Mars and ask it inane questions about history. Get that degree of “exploration” out of your system!

Getting back to something truly productive: if you want to make use of all the data you have collected in your EAM/CMMS system, or in historians full of telemetry data, Watson Studio can help there as well. Don’t assume that “data” is limited to numeric values. Long descriptions, log entries, and even attached documents can all be valuable sources of insight once the language models get their hands on them.

What the process looks like – data first

Here are some new ideas and skills you will need to dig into so that you fully understand the process. Let’s assume you have sets of equipment telemetry that you want to use either to predict future values or to discover and warn of anomalies. Here is the problem statement:

“In the past we have experienced several costly equipment failures. Given the last year of telemetry data from the temperature, vibration, and rotation speed sensors on Set A of our production equipment, we want to visualize anomalies and predict failures at least 30 days out.”

Now go find your source(s) of data. Data might be stored in several places, ranging from spreadsheets to operational databases all the way out to cloud storage locations. In the past, you may have needed to transform data from its raw state into a different format before it was useful. Hang on to those Extract, Transform, and Load (ETL) skills from the 1990s, as they are still needed!
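To make the Extract, Transform, and Load steps concrete, here is a minimal plain-Python sketch using pandas. It is not part of Watson Studio; the two sources, their column names, and the output file name are made-up assumptions standing in for a spreadsheet export and an operational database query.

```python
import io

import pandas as pd

# Hypothetical source 1: a CSV exported from a spreadsheet.
SPREADSHEET_CSV = """Timestamp,Vibration (mm/s)
2024-06-01 00:00,2.1
2024-06-01 00:15,2.3
"""

# Hypothetical source 2: rows pulled from an operational database.
DB_ROWS = [
    {"ts": "2024-06-01 00:30", "vib_mm_s": 2.2},
    {"ts": "2024-06-01 00:45", "vib_mm_s": 9.8},
]


def extract() -> list[pd.DataFrame]:
    """Extract: read each raw source into its own DataFrame."""
    sheet = pd.read_csv(io.StringIO(SPREADSHEET_CSV))
    db = pd.DataFrame(DB_ROWS)
    return [sheet, db]


def transform(frames: list[pd.DataFrame]) -> pd.DataFrame:
    """Transform: give every source the same column names and types, then combine."""
    sheet, db = frames
    sheet = sheet.rename(columns={"Timestamp": "timestamp", "Vibration (mm/s)": "vibration"})
    db = db.rename(columns={"ts": "timestamp", "vib_mm_s": "vibration"})
    combined = pd.concat([sheet, db], ignore_index=True)
    combined["timestamp"] = pd.to_datetime(combined["timestamp"])
    return combined.sort_values("timestamp").reset_index(drop=True)


def load(df: pd.DataFrame, path: str) -> None:
    """Load: write the combined data set to a single CSV file."""
    df.to_csv(path, index=False)


if __name__ == "__main__":
    data = transform(extract())
    load(data, "vibration_combined.csv")  # hypothetical output file name
    print(data)
```

The main design choice is agreeing on one set of column names and types before combining sources; after that, a single file is all you need to bring into a project.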

Watson Studio provides tools that help you turn raw data sources into useful data sets. The AutoAI feature can then analyze that data and determine the best model to apply for prediction and anomaly detection.

Find the Work with Data tile in Watson Studio to continue the journey.

As a side note, if you do not have a source of data to work with, you can use Watson Studio to generate fairly detailed “simulated” data that can follow well-known distributions and other data formation models. Yes, it is “fake” data, but if you have nothing to start with, it is good for learning.
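If you would rather experiment before opening the Studio, the same idea can be sketched in a few lines of numpy and pandas. This is not Watson Studio’s generator: the sensor names, means, spreads, and injected spikes are all made-up assumptions chosen to resemble the temperature, vibration, and rotation speed sensors in our problem statement.

```python
import numpy as np
import pandas as pd

READINGS_PER_DAY = 24 * 4  # one reading every 15 minutes


def simulate_telemetry(days: int = 7, seed: int = 42) -> pd.DataFrame:
    """Generate fake, normally distributed sensor readings for learning only."""
    rng = np.random.default_rng(seed)  # fixed seed makes the "fake" data repeatable
    index = pd.date_range("2024-01-01", periods=days * READINGS_PER_DAY, freq="15min")
    n = len(index)

    vibration = rng.normal(loc=2.5, scale=0.3, size=n)
    # Inject a handful of vibration spikes so there is something anomalous to find.
    spike_rows = rng.choice(n, size=5, replace=False)
    vibration[spike_rows] += rng.uniform(3.0, 6.0, size=5)

    return pd.DataFrame(
        {
            "temperature_c": rng.normal(loc=65.0, scale=2.0, size=n),
            "vibration_mm_s": vibration,
            "rotation_rpm": rng.normal(loc=1800.0, scale=15.0, size=n),
        },
        index=pd.Index(index, name="timestamp"),
    )


if __name__ == "__main__":
    print(simulate_telemetry().describe())
```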

Determine the best model for your data in Watson Studio

Once you have the Project defined, it is time to bring in your raw data set(s). They will simply be Assets available to you in the Project. Watson Studio projects consist of Assets such as data, models, flows, and notebooks that you create, import, edit, and, in some cases, run Jobs on.

On the Assets tab, bring in the data source(s) you have using the Data in this project section on the far right.

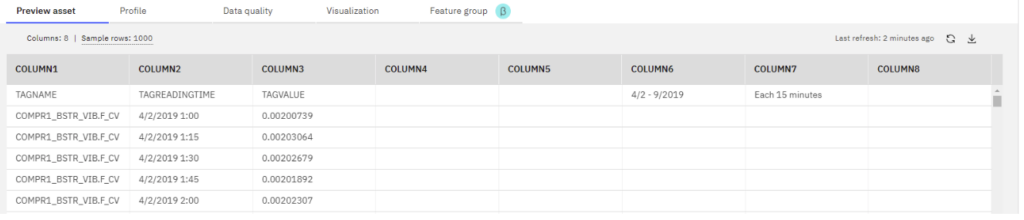

Now open the new data asset. The default Preview tab lists the data set you imported. In this case, we have brought in a week’s worth of vibration data captured every 15 minutes from a set of sensors on a piece of equipment. Feel free to explore the Profile, Data Quality, and Visualization tabs, but for our purposes, we will go straight to the Prepare data icon in the upper-right corner. Remember the mention of ETL? Well, here we go!
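Before shaping the data, it is worth confirming you actually have a full week. At one reading every 15 minutes, a week should contain 7 × 24 × 4 = 672 readings. Here is a plain pandas completeness check you might run alongside the Profile and Data Quality tabs; it is not how those tabs work, and the function name and sample timestamps are hypothetical.

```python
import pandas as pd


def find_missing_readings(timestamps: pd.Series, freq: str = "15min") -> pd.DatetimeIndex:
    """Return the timestamps expected at `freq` spacing that are absent from the data."""
    observed = pd.DatetimeIndex(pd.to_datetime(timestamps))
    expected = pd.date_range(observed.min(), observed.max(), freq=freq)
    return expected.difference(observed)


# One week at one reading every 15 minutes: 7 days x 24 hours x 4 readings per hour = 672.
week = pd.Series(pd.date_range("2024-06-01", periods=7 * 24 * 4, freq="15min"))
gappy = week.drop(index=[10, 11, 500])  # pretend three readings never arrived
missing = find_missing_readings(gappy)
print(len(week), len(gappy), len(missing))  # 672 669 3
```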

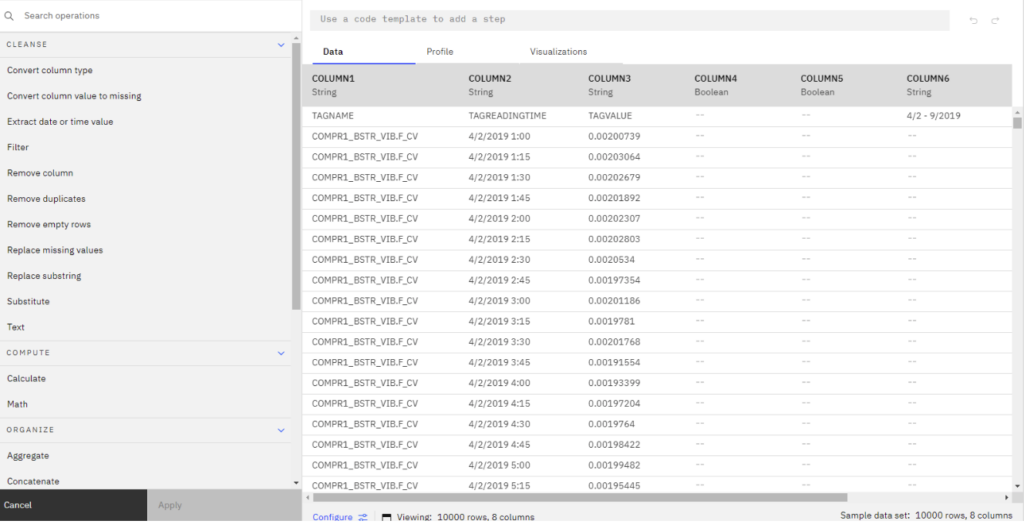

Launching Prepare data takes you to the Data Refinery feature of Watson Studio, where you can transform the data in many ways. The real advantage is that you can bring in further data sets with a similar structure and run them through the same transformation Steps you have defined. You can make the changes manually or as a series of Steps; even when you work manually, the Data Refinery records the Steps you took so you can run them again. These ETL sessions are saved as a “flow” Asset in your project.
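The record-and-replay idea behind a flow can be sketched in plain Python. This is a hypothetical stand-in, not Data Refinery’s actual flow format or API: each Step is a description plus a function, and the same list of Steps is replayed on any new data set with a similar structure.

```python
from typing import Callable

import pandas as pd

# Hypothetical stand-in for a saved flow; not Data Refinery's real format.
Transform = Callable[[pd.DataFrame], pd.DataFrame]


class Flow:
    """Records named transformation Steps so they can be replayed on new data."""

    def __init__(self) -> None:
        self.steps: list[tuple[str, Transform]] = []

    def record(self, description: str, func: Transform) -> "Flow":
        """Append one Step and return the flow so calls can be chained."""
        self.steps.append((description, func))
        return self

    def describe(self) -> list[str]:
        """List the Steps in order, e.g. to share with whoever supplies the data."""
        return [description for description, _ in self.steps]

    def run(self, df: pd.DataFrame) -> pd.DataFrame:
        """Apply every recorded Step, in order, to a (new) data set."""
        for _, func in self.steps:
            df = func(df)
        return df


flow = (
    Flow()
    .record("Drop the notes column", lambda d: d.drop(columns=["notes"]))
    .record("Drop rows with missing readings", lambda d: d.dropna(subset=["vibration"]))
)

# The same flow is replayed on two different weeks of made-up data.
week_1 = pd.DataFrame({"vibration": [2.1, None, 2.4], "notes": ["", "gap", ""]})
week_2 = pd.DataFrame({"vibration": [2.2, 2.3], "notes": ["", ""]})
print(flow.describe())
print(len(flow.run(week_1)), len(flow.run(week_2)))  # 2 2
```

Keeping a human-readable description with each Step is what makes the flow useful as a specification you can hand to the people providing the data.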

For our purposes, we will do three things: remove the first row, remove the extra columns, and remove any rows with zero values. These are common actions. Of course, it is best if your incoming data is already formatted for immediate use, but the Data Refinery helps you define and reuse those requirements so that you can also communicate them to the people providing you with the data.
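For comparison, here are the same three actions written in pandas. The raw export below is made up: we assume the first row holds units rather than readings, that only the timestamp and vibration columns are needed, and that a zero reading means the sensor was offline.

```python
import io

import pandas as pd

# Hypothetical raw export: the first data row holds units, not readings,
# and "sensor_id" and "notes" are not needed for modeling.
RAW_CSV = """timestamp,vibration,sensor_id,notes
units,mm/s,,
2024-06-01 00:00,2.1,S1,
2024-06-01 00:15,0,S1,sensor offline
2024-06-01 00:30,2.4,S1,
"""

KEEP_COLUMNS = ["timestamp", "vibration"]  # assumption: the columns the model needs
SENSOR_COLUMNS = ["vibration"]             # columns where a zero means a bad reading


def shape(raw: pd.DataFrame) -> pd.DataFrame:
    df = raw.iloc[1:]                    # 1. remove the first row (units)
    df = df[KEEP_COLUMNS].copy()         # 2. remove the extra columns
    df["timestamp"] = pd.to_datetime(df["timestamp"])
    df["vibration"] = pd.to_numeric(df["vibration"])
    df = df[(df[SENSOR_COLUMNS] != 0).all(axis=1)]  # 3. remove rows with zero values
    return df.reset_index(drop=True)


if __name__ == "__main__":
    print(shape(pd.read_csv(io.StringIO(RAW_CSV))))
```

Converting the columns to proper types only after the units row is gone matters: while that row is present, pandas reads the vibration column as text.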

After you define the transformations, save your work, and run the Job, you get a new data asset labeled “shaped.” You now have the original raw data, a “flow” to transform it, and the resulting shaped data ready for model analysis. Oh, how the excitement builds!

Finding the right model – AutoAI

Remember, our goal is to use this historical data to predict the future, and we need the correct statistical model to do that. Since none of us are statisticians by trade, let’s employ the Studio’s AutoAI feature to help us out. It is designed to fast-forward your development.

Add a new Asset to your project by selecting the AutoAI tile. Here is where you will begin “experimenting” with which model suits your data best.

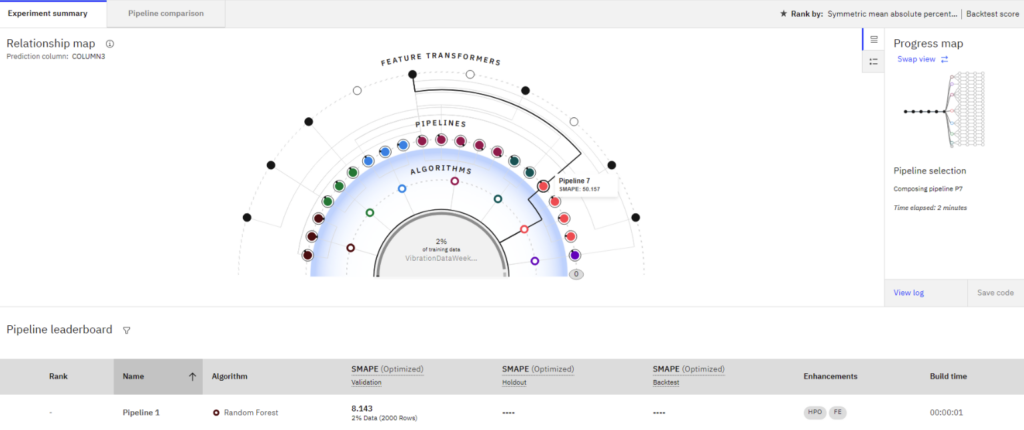

Bring in your shaped data asset, then choose Time Series. Next, choose either Forecast or Anomaly Detection, depending on your goals… remember your problem statement?
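To build some intuition for what anomaly detection on telemetry means, here is one simple, well-known technique: a rolling z-score. It is not what AutoAI does internally, and the one-day window (96 readings at 15 minutes) and the threshold of 3 standard deviations are assumptions you would tune for your own equipment.

```python
import pandas as pd


def flag_anomalies(values: pd.Series, window: int = 96, threshold: float = 3.0) -> pd.Series:
    """Flag readings more than `threshold` standard deviations from the trailing baseline.

    The baseline is the mean and std of the previous `window` readings (96 x 15 min =
    one day), shifted by one so a reading never counts toward its own baseline.
    """
    previous = values.shift(1)
    baseline = previous.rolling(window, min_periods=window).mean()
    spread = previous.rolling(window, min_periods=window).std()
    z_scores = (values - baseline) / spread
    # Comparisons with NaN (the first `window` readings) evaluate to False.
    return z_scores.abs() > threshold


readings = pd.Series([2.0, 2.2] * 100)  # made-up, steady vibration pattern
readings[150] = 10.0                    # one injected spike
flags = flag_anomalies(readings)
print(flags[flags].index.tolist())  # [150]
```

A trailing window means each reading is judged only against data that came before it, which is how the check would behave on live telemetry.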

The experiment begins after you answer a few questions, and you will soon be presented with a screen like the one below. Evaluating all the pipelines can take quite a while, so be patient. Wait until the ranking on the far left shows a “star” next to the best model.

What is happening here is that the AutoAI feature is trying to match the data set with the best model for forecasting values or detecting anomalies, depending on what you selected.
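The general idea of ranking candidates can be sketched in a few lines: fit each candidate on history, score it on a held-out stretch of the data, and sort by error. AutoAI uses its own pipelines and metrics; the three simple forecasters and the mean absolute error (MAE) metric below are stand-ins chosen for illustration, not what AutoAI evaluates.

```python
import numpy as np


def last_value(history: np.ndarray, horizon: int) -> np.ndarray:
    """Repeat the most recent reading."""
    return np.repeat(history[-1], horizon)


def historical_mean(history: np.ndarray, horizon: int) -> np.ndarray:
    """Repeat the average of all history."""
    return np.repeat(history.mean(), horizon)


def seasonal_naive(history: np.ndarray, horizon: int, season: int = 96) -> np.ndarray:
    """Repeat the most recent full season (96 x 15 min = one day)."""
    last_season = history[-season:]
    reps = int(np.ceil(horizon / season))
    return np.tile(last_season, reps)[:horizon]


def rank_candidates(series: np.ndarray, horizon: int) -> list[tuple[str, float]]:
    """Score each candidate on the last `horizon` points; lowest MAE ranks first."""
    history, holdout = series[:-horizon], series[-horizon:]
    candidates = {
        "last value": last_value,
        "historical mean": historical_mean,
        "seasonal naive": seasonal_naive,
    }
    scores = []
    for name, forecaster in candidates.items():
        forecast = forecaster(history, horizon)
        scores.append((name, float(np.mean(np.abs(forecast - holdout)))))
    return sorted(scores, key=lambda item: item[1])


# Made-up telemetry with a clean daily cycle, sampled every 15 minutes for a week.
t = np.arange(96 * 7)
series = 2.5 + np.sin(2 * np.pi * t / 96)
for name, mae in rank_candidates(series, horizon=96):
    print(f"{name:16s} MAE={mae:.3f}")
```

Scoring on data the candidates never saw is the important part; ranking by fit to the training data alone would reward models that simply memorize history.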

When the process is complete, the “winning” pipeline is presented at the top of the list in the bottom section. From there, you can have the system generate a Model or a Notebook for further use.

Wrap up: Watson Studio Essentials

We leave you at the halfway point of the process, saving the next steps and the use of the model for a future article. Our goal was to get you into Watson Studio with a common task: using ETL to prepare your data and beginning to use it for AI/ML purposes.

TRM and IDCON have been working with client data in support of decision-making processes for many years, using many different tools. With the advent of AI/ML tools, we can leverage our many years of experience across industries to help you make the most of these tools as well.

John Q. Todd

John Q. Todd has nearly 30 years of business and technical experience in the Project Management, Process development/improvement, Quality/ISO/CMMI Management, Technical Training, Reliability Engineering, Maintenance, Application development, Risk Management, & Enterprise Asset Management fields. His experience includes work as a Reliability Engineer & RCM implementer for NASA/JPL Deep Space Network, as well as numerous customer projects and consulting activities as a reliability and spares analysis expert. He is a Sr. Business Consultant and Product Researcher with Total Resource Management, an IBM Gold Business Partner focused on the market-leading EAM solution, Maximo, that specializes in improving asset and operational performance by delivering strategic consulting services with world-class functional and technical expertise.

Related Articles

Keys for Effective Troubleshooting

Analyzing Semiconductor Failure

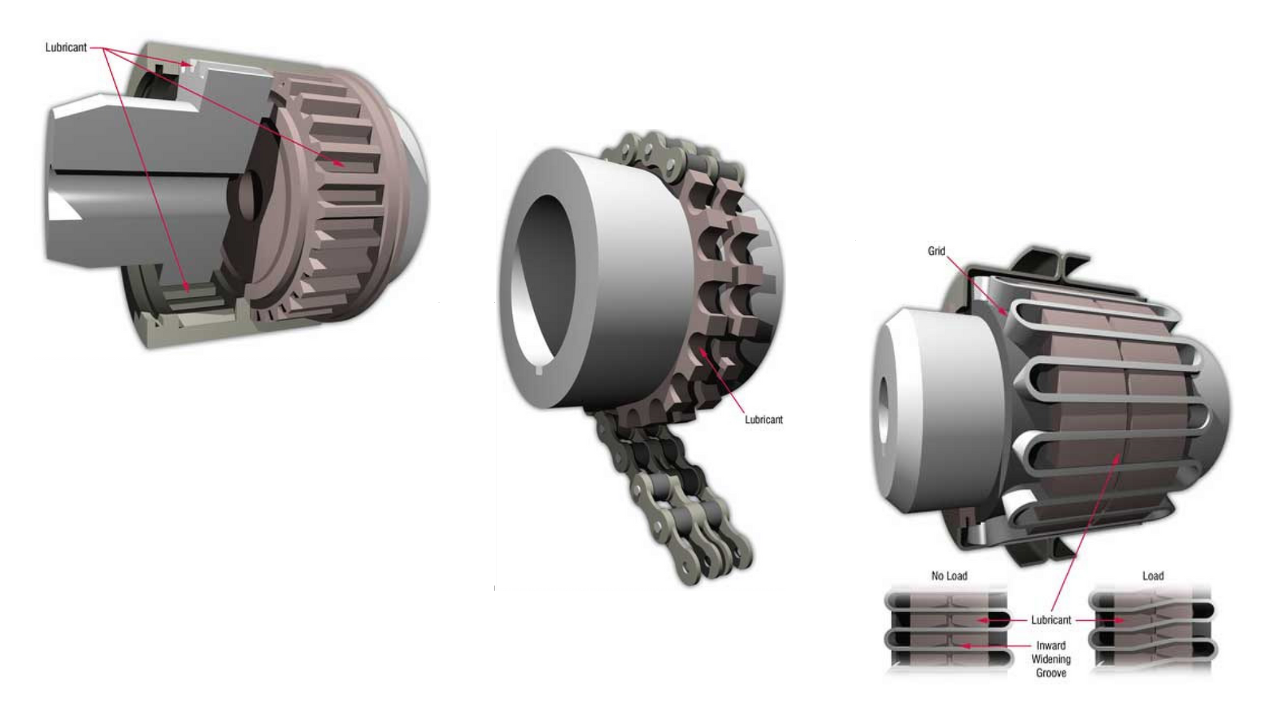

The Lubrication Requirements of Couplings

What is the True Downtime Cost (TDC)?

Improvement: What Comes First?

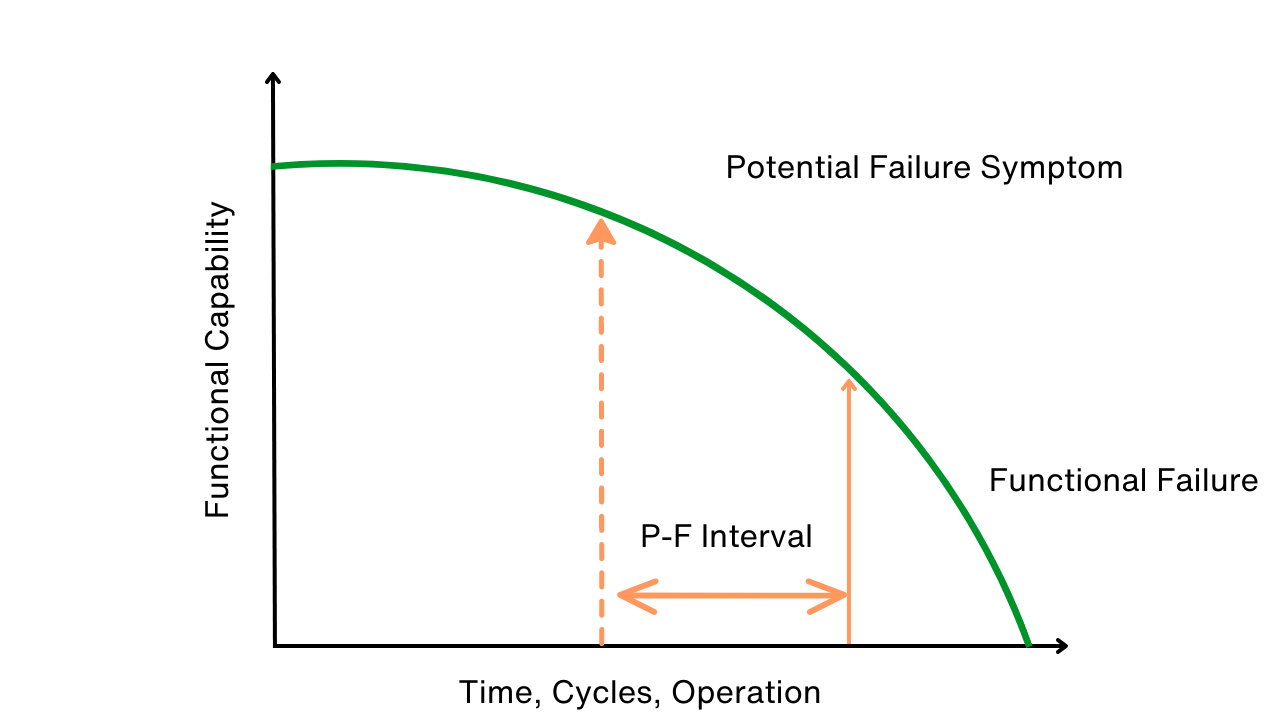

Use P-F Intervals to Map, Avert Failures